14 Machine learning methods for classification

14.1 Introduction

The classification problem is a very common one in practice, and we have already seen the use of GLMs to systematically predict binary response variables. We have also used clustering to perform unsupervised learning, where we do not have any information about correct labels for data points. We now turn to supervised classification problems and introduce two different approaches: a Bayesian one and a tree-based one.

14.2 Naive Bayes classifier

Suppose that we wish to classify an observation into one of K classes, which means there is a response variable Y can take on K different values, or labels. Let \(π_k\) be the prior probability that a randomly chosen observation comes from the k-th class. Let \(f_k(X) =Pr(X|Y = k)\) be the density function of X for an observation that comes from the k-th class.

Assuming we have the prior probabilities and the conditional probability distributions of the observations within each category \(k\), we can use Bayes’ theorem to compute the probability of each class, given a set of observations \(x\) by turning around the conditionality:

\[ P(Y = k | X = x) = \frac{\pi_k f_k(x)}{\sum_i^K \pi_i f_i(x)} \] And let us use the notation \(p_k (x) = P(Y = k | X = x)\) to mean the posterior probability that an observation \(x\) belongs to class \(k\).

Let us use the penguin data as an example, where we want to classify the observations by species. Then, if we take a training set with known classifications, we can take the prior probabilities \(\pi_k\) to be the fractions of observed birds of each species, and the probability distributions of each explanatory variable for each species \(f_k(X)\) can be estimated from the observed distributions of the explanatory variables (flipper lengths, etc.) for Adelie, Gentoo, and Chinstrap subsets of observations.

The difficult part in the above example is estimating the distributions \(f_k(X)\), which is especially challenging for joint distributions of multiple variables. One method, called Linear Discriminant Analysis, assumes the distributions have the same covariance matrices for all classes and only differ in their mean values. Another, called Quadratic Discriminant Analysis, assumes different covariance matrices for different classes.

The Naive Bayes classifier instead assumes that within each class the explanatory variables \(X_i\) are independent, and thus

\[ f_k(x) = f_{k1} (x_1) \times f_{k2} (x_2) \times ... \times f_{kn} (x_n) \]

where \(f_{ki}(x_i)\) is the probability distribution of the i-th explanatory variable \(x_i\) for class \(k\).

This modifies the Bayes’ formula to look like this:

\[ P(Y = k | X = x) = \frac{\pi_k f_{k1} (x_1) \times f_{k2} (x_2) \times ... \times f_{kn} (x_n)}{\sum_i^K \pi_i f_{k1} (x_1) \times f_{k2} (x_2) \times ... \times f_{kn} (x_n)} \]Although it looks more complicated, we can compute each distribution function separately, so as long as there is enough data in the training set to estimate each explanatory variable for each class, the calculation is manageable.

14.2.1 Naive Bayes penguin example

Here is an example of using tidymodels for classification using Naive Bayes.

First, we load and clean the data, then split the observations into training and test sets.

We then define a model specification using the parsnip package. Here is how to set up a Naive Bayes model:

Next we define the recipe, which specifies the data tibble and which variables will be explanatory (predictors) and which will be the response variable. In this case we will use species as the response variables, and all other variables, except for island as predictors.

Then we define the workflow, which combines the model with the recipe:

Finally, we use this workflow on the data set to fit the response variable on the training set:

We can examine the contents of the fitted model, such as parameters, using extract_fit_parsnip. In the case of Naive Bayes, it returns the calculated mean and standard deviations for each variable, by class:

Now we can use the parameters from the parameters from the fitted model to predict the species in the test set. The function augment performs the prediction and adds a new column called .pred_class to the data frame. Then we can compare the truth with predictions using a confusion matrix or an accuracy score:

14.2.2 Breast cancer data

Here is an data set of breast cancer samples, where the classification of each sample is given in variable Class as either “benign” or “malign”. The other variables contain observations that we can use to classify the samples.

First, we clean and split the data set:

Next, we can use the same model specification nb_spec, define a new recipe and apply it to the data, and fit the model:

Predict the classification of samples in the test set and compare the truth with predictions using a confusion matrix and an accuracy score:

14.3 Decision Trees

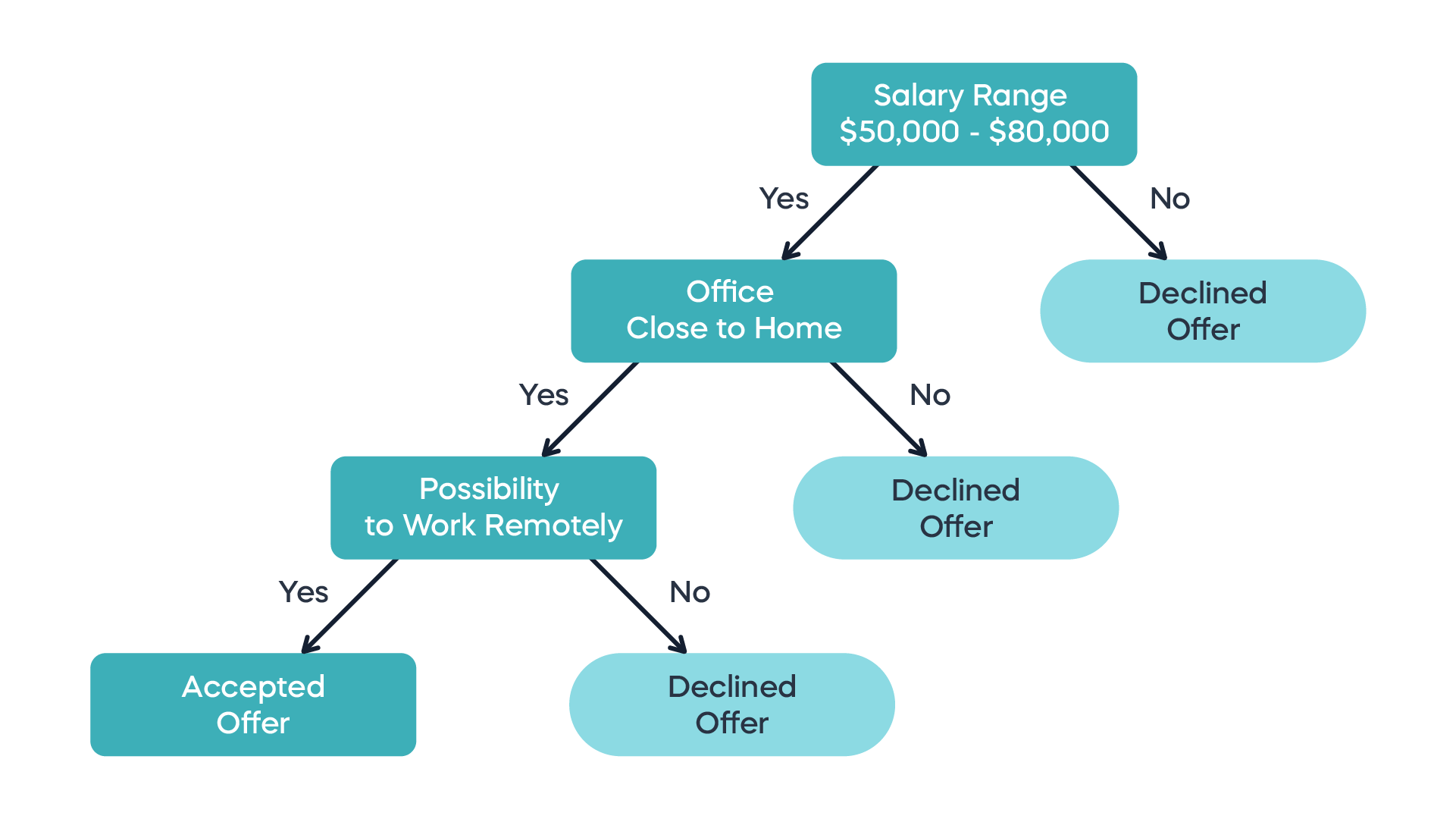

Suppose instead that we represent the classification process as a sequence of binary choices, that eventually lead to a category. This can be represented by a decision tree, whose internal nodes are separators of the space of observations (all the values of explanatory variables) that divide it into regions, and the leaves are the labels of these regions. (Decision trees can also be used for quantitative response variables, but we will focus on classification.) For example, here is a a decision tree for accepting a job offer:

Building a decision tree for classification happens by sequential splitting the space of observations, starting with the decision that gives the most bang for the buck. Let us define the quality of the split into \(M\) region by calculating how many observations in that regions actually belong to each category \(k\). The Gini index (or impurity) is defined as the product of the probability of an observation (in practice, the fraction of observations in the training set) being labeled correctly with label \(k\) (\(p_k\)) times the probability of it being labeled incorrectly (\(1-p_k\)), summed over all the labels \(k\):

\[ G = \sum_k (1-p_k)p_k \]

Alternatively, one can use the Shannon information or entropy measure:

\[ S = -\sum_k p_k log(p_k) \]

Notice that both measures are smallest when \(p_k\) is close to 1 or 0, so they both tell the same story for a particular region: if (almost) all the points are points are either classified or incorrectly, these measures are close to 0.

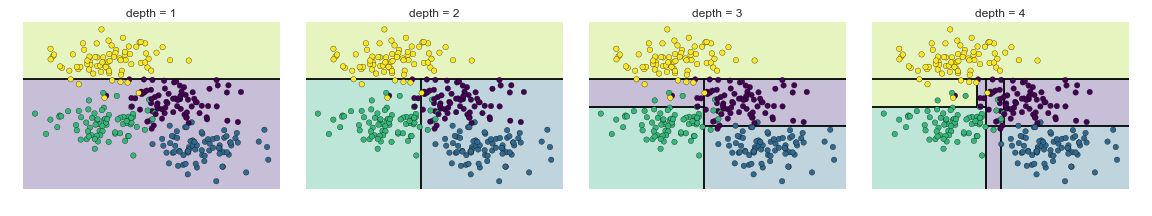

One of these measures is used to create the sequential splits in the training data set. The biggest problem with this decision tree method is that it’s a greedy algorithm that easily leads to overfitting: as you can see in the figure above, it can create really complicated regions in the observation space that may not correspond to meaningful distinctions.

14.3.1 Penguin data

Define the model specifications for decision trees:

Create a recipe, apply the model and fit it on the training set:

Predict the classification for the test set and validate the predictions:

Make a plot of the best-fit decision tree:

14.3.2 Breast cancer data

Using the same model tree_spec, create a recipe, apply the model and fit it on the training set:

Predict the classification for the test set and validate the predictions:

Make a plot of the best-fit decision tree:

14.4 Random Forests

Overfitting usually results from modeling meaningless noise in the data instead of real differences. This gave rise to the idea to “shake up” the algorithm and see if the splits it produces are robust if the training set is different. In fact, let’s use multiple trees and look at what the consensus of the ensemble can produce. This approach is called bagging, which makes use of an random ensemble of parallel classifiers, each of which over-fits the data, it combines the results to find a better classification. An ensemble of randomized decision trees is known as a random forest.

Essentially, the process is as follows: use random sampling from the data set (bootstrapping) to generate different training sets and train different decision trees on each. Then for each observation, find its consensus classification among the whole ensemble; that is, how does the plurality of the trees classify it.

Since each data point is left out of a number of trees, one can estimate an unbiased error of classification by computing the “out-of-bag” error: for each observation, used the classification produced by all the trees that did not have this one points in the bag. This is basically a built-in cross-validation measure.

14.4.1 Penguin data

Define the model specification for random forests:

Create a recipe, apply the model and fit it on the training set:

Predict the classification for the test set and validate the predictions:

Calculate the “last fit” of the entire data set:

Plot the importance measures of different variables:

14.4.2 Cancer data

Using the same model rf_spec, create a recipe, apply the model and fit it on the training set:

Predict the classification for the test set and validate the predictions:

Calculate the “last fit” of the entire data set:

Plot the importance measures of different variables: